I've noticed recently that several cable TV shows that talk about science, future science, even history are now showing a small circle with the letters "AI" on scenes showing re-enactments or predicted outcomes. Maybe the entire entertainment industry (including social media) needs to adopt this idea, as it would remove any doubts as to authenticity.


Ai bots threaten the digital canoeing world

- Thread starter Alan Gage


the entire entertainment industry (including social media) needs to adopt this idea as it would remove any doubts as to authenticity

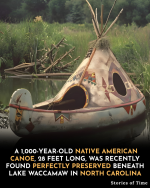

I agree, it is particularly disturbing when inaccurate AI-generated images of the past are presented when correct or original images are readily available. The frequently shared one shown below is especially annoying to me.

Benson


I have found AI to be useful when researching legislation and legal history. Also for some scientific explanation or basic technical instructions. But it's important to remember that AI tends to hallucinate (just as bad as humans), so everything has to be read with a critical eye - especially with anything that might be political in nature. As for the bots, they currently have tells. My fear is that they'll get so good that the tells are no longer apparent.

Bingo, that’s the critical thing. Critical eye, critical thinking and reasoning skills. We’re preaching to the choir here because we’re all of an age where we learned before there was AI; my kids are growing up in a digital era where they aren’t necessarily learning to differentiate between Britannica and Wikipedia. They can’t spot trash when they see it. Their teachers? In many cases I’m old enough to be their parent too, so my confidence in their abilities is… not high.

I admit I haven't used dedicated AI interfaces like ChatGPT, but I've noticed the AI summaries that come with web searches these days are either patently wrong or of such low quality often enough that I scroll right past them instead of bothering to read them.


I haven't messed w/ GPT but I've played around a little with the free version of Grammarly just to see what changes it might suggest (I always want to write better). The tech is developing rapidly but it's not quite there yet. Many of the suggestions that it made would change the context and a few were simply wrong (admittedly, that may be because it misinterpreted what I was trying to relate because I was not concise enough to begin with.)

At the same time, I've seen some truly stunning AI photos and believe that computers will eventually acquire the ability to pander to our tastes enough that they may take the place of human writers & photographers someday. Maybe leaving small grammatical errors within TRs could be a HQ (Human of Questionable Intelligence) tell at some point in the future.


I use ChatGPT quite a bit and it's almost completely replaced Google as my first source when looking for information. I'm good at searching Google to find the information I need but AI is much better (most of the time). The more information available about a topic the better it does, and while it can certainly get things wrong I'm very impressed overall. It's not uncommon that I still need to search Google for the final answer but ChatGPT usually gets me 95% of the way there so my Google searches are more targeted.

Most recently it's been giving me book recommendations. After some back and forth it seems to have figured out my tastes pretty well. It will give me half a dozen recommendations, which I read and then give feedback on, before giving me more.

I was switching road bike components from one bike frame to another and the rear derailleur would not mount correctly. I told ChatGPT what was happening and how the dimensions of the hanger differed. It explained what the problem was and told me there was an adapter available for my rear derailleur. It gave me a part number, which was completely wrong, but it was enough info that a quick Google search turned up exactly what I needed. I could have found out all that information from Google but it would have taken much longer.

A few months ago I was looking for a used minivan to mostly use for traveling. I asked ChatGPT for a list of suitable vans and to list the specs I was most interested in (cargo length, cargo height, ride height, etc.). It was able to quickly produce charts comparing not only different brands but also changes within the same model from year to year (what do I gain/lose by getting a 2020 instead of a 2017?) as well as showing the differences between trim levels. It turned into a very long conversation over the course of a month or so as we drilled down to what was best for me and my budget. In the end I purchased a Ford Transit Connect that hadn't even been on my radar until ChatGPT suggested it as an option.

I also tried to give it wiring diagrams and asked it to explain system operation on specific vehicle systems (what I do for a living) and it failed dramatically. What it initially spit out looked very impressive but when paying close attention it was getting small but important details wrong. I'd tell it something was wrong and to double check, and it would often find the correction, only to make the same mistake again later. I asked it why it was getting it wrong and it admitted to using general knowledge (such as common relay pin numbers) rather than the specific information from the diagram and vehicle I gave it. It promised not to make those assumptions again but it went right back to old habits a few days later when I picked up the conversation again. These mistakes were obvious to me but could have been disastrous if someone just learning was given the same answers.

For anyone that's curious I recommend playing with it to see how it does. Ask it questions about subjects you're already familiar with so you can judge the quality of its content. That will also show you the best way to phrase questions to get the right information.

Alan


Indeed, I do like the idea of easier background research, a task I find to be a chore. I recently read 'How to Think About AI' in which the author points out that AI will only get better, so writing it off based on current capabilities is a fool's folly.

I still don't like how AI products that don't seem ready for primetime are getting rammed down my throat by big tech. And for some frightening reading, check out the book 'AI Snake Oil', which details some absolutely scary applications (medical diagnoses, legal rulings) of AI trained on really biased datasets.

But I recognize I'll probably have to get on board at some point if I want to stay employed.... I'm just putting off spending the time to get up to speed on using it in smart and effective ways. Hopefully in a couple yrs it will be better and I'll be ready to learn.



My experiences are much like Alan’s.

ChatGPT gives me more focused and specific answers than Google does. I may have to sort through dozens of entries in Google, whereas ChatGPT answers directly. Of course I have to read more or check sources if it is something critical, but I’d have to do that anyway.

I also can tell ChatGPT that an answer is wrong and it will try to correct itself, which may or may not be successful.

It has evolved markedly from a couple of years ago.

Wikipedia is still better at “encyclopedia” type answers such as a general inquiry on “Magdalen Islands.”

Obviously there will be many more opportunities for mischief and worse. I don’t know how anyone raised with AI will ever be able to distinguish between correct and incorrect answers.

I treat AI responses the same way I treat all crowdsourced or questionable answers to research, and stick to the old Russian proverb "Doveryai, no proveryai": trust, but verify...

To be honest though, this is not a new problem; it goes all the way back to punch cards and magnetic tape. With any results it's often a case of "garbage in = garbage out". The only difference now is people's propensity to believe in that garbage and spread it around, which is one of the reasons I absolutely abhor search engines that feed you the "most popular result", not "the most logical result".

Some days I wonder if we're cosplaying the movie "Idiocracy".


A current problem with AI engines is that companies have run out of data to input and wind up occasionally using data outputted by AI as new input. The errors contained therein further corrupt the database and keep producing incorrect answers. Hard to imagine they have input everything possible, but it seems that may be what’s happening. Thank heavens I’m old enough to have received some training in critical thinking and, as mentioned before, I’m pretty nervous for the young people today who don’t seem to be receiving that kind of help.


Let's not forget that scammers target the elderly rather than the young.

Alan

It's true. There are a lot of older folks getting taken by online scammers and social media disinformation. There's an advantage to being a digital native as well as to having been taught to think critically. Critical thinking abilities and speed can decline with age, too.

Believe it or not, young people fall for digital scams more often than the elderly. There is data to support that.

Do tell. I'm always happy to learn and correct a misconception of mine.


I dunno... A kid (25ish) at work got a call recently that "needed to verify some info due to a data breach".

The imbecile gave them everything: Social Security number, banking info, birthdate... everything anyone would need to clean him out or assume his identity.

Granted, I've never considered him to be the brightest bulb in the chandelier but still...

Try this report:

www.ftc.gov


Who experiences scams? A story for all ages

Many people think scams mostly affect older adults. But reports to the FTC’s Consumer Sentinel tell a different story: anyone can be scammed.

Different targets, different scams.

One of AI's biggest issues that isn't talked about that much is the environmental damage that will be involved. AI server farms consume huge amounts of electricity (data processing) and massive amounts of water (cooling).

Tried using ChatGPT to obtain recent info on freestyle canoeist Dana Grover, from whom I bought my solo canoe c. 1990. Dana is a male, but the bot gave me generic info about a female canoeist.

This is frustrating...a made up answer. Renews my distrust in AI.

BTW, if anyone reads this, have you heard anything about Dana? He lived in Illinois near Champaign, in the 1990s, and was active in the ACA in the early 2000s.



If ChatGPT doesn’t get your question, you need to provide more information. Apparently there are many Dana Grovers in the world. Here is my question and answer:


My question: Dana Grover, a male canoe maker. Information please

ChatGPT said:

Good question. I found a few credible references to Dana Grover, though the available information is rather limited and his role isn’t as a traditional “canoe maker” (i.e., builder) but more on the business / sales / design side. Here’s what I uncovered, plus some context.

Who Is Dana Grover?

- Sales Director at Sawyer Canoe
  - According to a ProBoat magazine article, Dana Grover was (or is) the sales director at Sawyer Canoe, located in Oscoda, Michigan. (ProBoat)
  - In that role, he was quoted about market trends, particularly emphasizing that older customers (e.g., in their 50s) were buying higher-end, lighter canoes. (ProBoat)
  - He also noted that many single professional women were becoming a growing segment of buyers. (ProBoat)
- Designer / Innovator
  - According to paddling-forum discussions, Dana Grover is credited with having developed the “Christie” turning design (or turning method) for canoes, particularly “in the mid-80s.” (Paddling Forums)
  - The “Christie” turn is apparently associated with more maneuverable sit-and-switch (or delta) canoes. (Paddling Forums)
  - Given this, he seems not just a salesperson but someone with technical influence on canoe design.
- Personality
  - In ProBoat, he is described as “ebullient,” which suggests he was well-known for being enthusiastic, outgoing, and perhaps a strong advocate for canoeing and the product lines he represented.

I also inquired about where he is now and ChatGPT does not know. A middle name or DOB would help. There are some obituaries but no way to find out if it is for this Dana Grover.

When ChatGPT does not understand the question, you just have to tell it what is wrong and it will fix it.

To be honest though, this is not a new problem, it goes all the way back to punch cards and magnetic tape- with any results it's often a case of "garbage in= garbage out". The only difference now is people's propensity to believe in that garbage and spread it around;

Ain't that the trashy social media truth.

I've used Google's AI quite a bit, and some other one that's not ChatGPT. My experience has been mixed. Sometimes it does a fast and thorough job of answering questions and summarizing areas of knowledge, presumably when it has access to accurate databases that are on point.

Other times, it clearly doesn't know—and, worse, makes stuff up. I've interrogated it on legal questions and it gets a lot of them spectacularly wrong. It probably has no access to legal databases, and has difficulty in distinguishing how legal rules and results change depending on different underlying facts.

One time I spent about an hour chatting with AI about this site and myself. It got a lot correct, but just seemed to make a bunch of stuff up. I learned that I was a "leading advocate" of the "skim stroke." I have no idea what that is. Maybe I posted somewhere that skimming your paddle along the water surface on a recovery can be fun or sensual, but I don't recall. It also went on to describe my "other interests" such as running a refreshment stand with my wife, which is a total hallucination.

I skim the Google AI responses that appear, but I don't trust them. There's too much garbage and guessing mixed in with correct analyses of available, known facts, and it's often impossible to differentiate what's what without further searching and reading original sources myself.

That's one of the most important things I've learned in my academic, professional and personal life: Always go to original source material and read it yourself; don't trust someone else's, especially the media's, reporting of it. God help a world that relies on social media comments and tweets and clickbait headlines for news.
